Research

I am no longer active in academic research.

My research was mainly in Artificial Intelligence, more specifically at the intersection of Computer Vision and Natural Language Processing. I integrated visual and linguistic data to tackle text and image understanding problems. I was one of the very few people working in this niche area when I started my PhD back in 2008.

The general theme behind my research was to investigate intelligent systems that

- learn from no or little data;

- can acquire 'general' knowledge as opposed to those that can only solve a specific problem;

- incorporate multiple modalities concurrently in learning and in making decisions.

I wish I had had more time to focus on artificial intelligence research that is more closely aligned with and inspired by human cognition (e.g. cognitive neuroscience, cognitive psychology, philosophy, linguistics). I am actually more interested in how humans learn, and how we can apply this knowledge to improve artificial intelligence (or, more specifically, artificial general intelligence). I would have also enjoyed working in the field of computational creativity. The things you only figure out in hindsight. Sigh.

History of my research career

Up until I abandoned academic research to move into full-time teaching in 2019, I was a Postdoctoral Researcher (i.e. a 'paper factory worker' trying to get onto the 'academic ladder'). My last postdoctoral position was at Imperial College London, and I was involved in the MultiMT and MMVC projects (both led by Prof. Lucia Specia).

Before moving to Imperial College London, I was a Postdoctoral Researcher at the Department of Computer Science, The University of Sheffield, where I worked on the ERA-NET CHIST-ERA D2K 2011 Visual Sense (ViSen) project, a joint Computer Vision and Natural Language Processing consortium led by Prof. Robert Gaizauskas in Sheffield, and coordinated by Dr Krystian Mikolajczyk from Imperial College London. I spent some memorable years working with our consortium partners in Lyon (France) and Barcelona (Spain), travelling to Europe for our various meetings by the sea and in the mountains. We were ahead of our time working on the task of automatic image captioning (still a relatively new task when I started). Alas, we fell prey to the deluge called Deep Learning and could not keep pace with the big guns!

I completed my Ph.D. in 2013 at the School of Computing, University of Leeds. I worked under the supervision of Prof. Katja Markert (from the then Natural Language Processing group) and the late Dr Mark Everingham (Obituary on IEEE) (from the then Vision group). Mark is best known as the main organiser of the PASCAL Visual Object Classes (VOC) Challenge (find my name in the acknowledgements on the 2010 page!). There is now a prize awarded in his honour. I am one of only three of his Ph.D. students to graduate from Leeds (alas, none of us stayed on in academic research).

Prior to that I worked on my M.Sc. dissertation in 2007 under the supervision of Prof. David Hogg. David basically pioneered object tracking in video back in the 1980s (e.g. his journal paper from 1983). I remember him describing how he had to physically carry around stacks of tapes containing video recordings - how times have changed! He was also the one who pushed me into doing a Ph.D. combining Computer Vision and Natural Language Processing when it wasn't even a thing yet - what a visionary!

Research highlights

Here are highlights of some of the more interesting research I did during my career, along with my own commentary sharing behind-the-scenes backstories and trivia (many of which are rants). Underneath all the glitz of being a researcher and behind all the fancy academic writing, scientists are really just humans.

Learning Object Recognition from Textual Descriptions

Can we get a system to automatically learn a model of an object category solely from a textual description?

Comments

This is still my proudest work to date (back from my PhD). It was pioneering work at the time (2008), when the only main group doing joint Computer Vision and NLP work was the Vision group at UIUC. In fact, when my paper was being reviewed for BMVC 2009, the program committee even assumed that it was from that group (it was a blind reviewing process)! My supervisor/advisor, who was at the meeting, was probably secretly laughing inside. It got through with an oral presentation (mainly for its novelty) - only the cream of the crop generally gets an oral at Computer Vision conferences! Unfortunately, my very successful first year of PhD quickly went downhill from there - nothing quite worked after that (hence my lack of publications during my PhD). My PhD basically started like a rocket, and then crashed and burned. My project might have been too ambitious and too far ahead of its time.

Relevant papers

Josiah Wang, Katja Markert, Mark Everingham

British Machine Vision Conference (BMVC), 2009

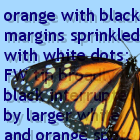

Comments: The main paper from the first year of my PhD. The aim is to figure out how to automatically detect butterflies solely from a textual description of the butterfly from an online field guide. No example images of the butterflies are required! To do this, I extracted information about a butterfly's colours and patterns from the textual description. I then built a spot detector and a colour detector, and used these to detect spots and colours on a previously unseen butterfly image. I then tried to match the detected spots and colours in the image to the closest butterfly model I extracted from the textual descriptions earlier. I also did an interesting experiment where I got people (non-experts) to perform the same task (classify butterfly images solely from a set of textual descriptions). This was my first major conference paper, and as mentioned was presented orally at BMVC 2009 in London.
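To make the matching step a little more concrete, here is a minimal Python sketch. It is not the model from the paper. It assumes that each butterfly's description has already been reduced to a hypothetical set of attribute strings (colours and spot patterns), and that the spot and colour detectors have produced a similar set for the image. It then simply picks the category with the highest Jaccard overlap.

```python
# A minimal sketch, NOT the BMVC 2009 model: text-derived "models" are
# hypothetical attribute sets, and matching uses plain Jaccard overlap.

def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of attributes shared by two sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(detected: set[str], text_models: dict[str, set[str]]) -> str:
    """Return the category whose text-derived attributes best match the detections."""
    return max(text_models, key=lambda name: jaccard(detected, text_models[name]))


# Hypothetical attributes, as if extracted from field-guide descriptions.
text_models = {
    "Red Admiral": {"black", "red band", "white spots"},
    "Small Tortoiseshell": {"orange", "black spots", "blue margin spots"},
    "Peacock": {"red", "eyespots"},
}

# Hypothetical output of the spot and colour detectors on an unseen image.
detected = {"black", "red band", "white spots"}
print(classify(detected, text_models))  # Red Admiral
```

Crisp sets are a simplification. Real detector outputs are noisy, so a practical version would more likely compare scored attributes than exact strings.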

This paper almost didn't happen. We had actually more or less given up the day before the deadline, as we weren't getting good enough results. Then, relaxed after having made that decision, I miraculously produced some good results that evening (and even better results after more tweaking). And so the paper was whipped up in a single day the next day (a Sunday) - with Mark Everingham basically rewriting the whole paper because I was a useless first-year PhD student 🙃 (in Mark's memory, here's a quote as I remember it: "I am shocked by your literature review"). I think towards the end, I managed to write a single paragraph in 3 hours, and I still have no idea how I managed to shorten the paper from 11 pages to 12 pages (this is not a typo!). You can probably tell that I only thrive when I'm relaxed! Fortunately, the next day was a bank holiday, so I could recuperate.

Josiah Wang, Katja Markert, Mark Everingham

European Conference on Information Retrieval (ECIR), 2016

Comments: This paper is from the final chapter of my PhD thesis. The work actually came halfway through the third year of my PhD, when nothing in my original, carefully laid-out plan was working. After a short nervous breakdown, my supervisors and I hatched a new plan (and a new narrative to go with it). What if we cast the problem of "learning a butterfly category without images" as one of "automatically crawling the Web for images of a butterfly category starting from only its name and textual description"? We could then train a traditional image classifier with these images. That was how this work was born. The main issue was how to ensure that the images depict the correct butterfly. After lots of trial and error, the solution I came up with was to match each image to its closest text on the web page: if the closest text is similar to our butterfly description, then we assume that the image depicts the butterfly! There is then the issue of "finding the closest text". Most solutions I found at the time only analysed the HTML DOM structure to solve this task. I instead went with what I thought was an ingenious approach: grabbing information on how the page is actually rendered by a browser engine. Because isn't that the most obvious approach? So, after lots of automatic page rendering and babysitting, the output was... meh. It works for some cases, but doesn't work for others. But a PhD waits for no one - so I just reported the results as they were.
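The "closest text" idea can be sketched in Python under some clearly stated assumptions. The page is assumed to have already been rendered by a browser engine (not shown) into hypothetical bounding boxes for the image and for each text block. "Closest" is taken to mean the smallest centre-to-centre distance. "Similar" is taken to mean a word-overlap score above an arbitrary threshold. None of these choices are taken from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned box in rendered-page pixel coordinates (hypothetical input)."""
    x: float
    y: float
    w: float
    h: float

    def centre(self) -> tuple[float, float]:
        return (self.x + self.w / 2, self.y + self.h / 2)


def closest_text(image: Box, blocks: list[tuple[Box, str]]) -> str:
    """Return the text of the rendered block whose centre is nearest the image centre."""
    cx, cy = image.centre()

    def distance(block: tuple[Box, str]) -> float:
        bx, by = block[0].centre()
        return math.hypot(bx - cx, by - cy)

    return min(blocks, key=distance)[1]


def word_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lower-cased word sets (a crude stand-in for text similarity)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def accept_image(image: Box, blocks: list[tuple[Box, str]],
                 description: str, threshold: float = 0.3) -> bool:
    """Keep the image if its nearest text resembles the category description."""
    return word_overlap(closest_text(image, blocks), description) >= threshold


# Hypothetical rendered layout: a caption just below the image, a footer far away.
image = Box(0, 0, 100, 100)
blocks = [
    (Box(0, 110, 100, 20), "black wings with a red band"),
    (Box(0, 900, 600, 20), "contact us about this website"),
]
description = "black wings with a red band and white spots"
print(accept_image(image, blocks, description))  # True
```

Centre distance is the simplest possible choice. Distance between box edges would probably cope better with wide text blocks.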

I only found time to put the conference paper together and get it published THREE WHOLE YEARS after I completed my PhD. Fortunately, the paper was accepted, resulting in me spending a memorable two weeks over Easter in 2016 in Padua visiting my best buddy St. Anthony of Padua, viewing Galileo Galilei's lectern, having Italian coffee for breakfast every morning at the family-run hotel where I was staying, visiting the surrounding region (Venice, Verona, Lake Garda, Treviso, Montagnana, Monselice, Bologna), enjoying gelato and local regional cuisine every day, eating the original tiramisù at Le Beccherie in Treviso, trying out proper Ragù alla Bolognese in Bologna, etc. And attending ECIR of course. Yes, it's all about work! 🙃

Visually Descriptive Language (VDL)

Can we determine whether what a piece of text says is true by visual sense alone?

Comments

I consider this one of my most interesting and finest pieces of work. This is a proper computational linguistics paper. It stemmed from our early attempt at automatic image captioning. To be able to learn how to automatically describe images, we tried to collect some corpora describing an object (say a cat). Unfortunately, most text doesn't actually describe an object. See, for example, the Wikipedia article for cat. Most of the text does not visually describe a cat. I only found a few relevant sentences in the whole article, for example "Most cats have five claws on their front paws, and four on their rear paws". Which got us thinking - can we actually try to detect which bits of a text are visual? Which led to more thoughts about what it means to be 'visual', etc. In the end, we settled on the idea of 'visually confirmable' text: text that allows you to confirm that what it says is true using your visual senses alone. Which then led us to try to annotate some visually confirmable text (and find all kinds of unexpected cases). More details are in the paper and in our full definition and guidelines.

It is a real shame that this work was not fully appreciated by the research community - it was rejected by two major conferences (ACL 2015 and EMNLP 2015). We finally submitted it to a workshop, as nobody seemed interested in our masterpiece. I was particularly triggered by an ACL reviewer who completely misunderstood everything about the paper and at the same time made the loudest noise, resulting in the paper being rejected from ACL despite the other two reviewers being supportive (as there was no chance for a rebuttal). One reviewer who actually understood the paper gave us a 5 (out of 5). The reviewers at EMNLP, on the other hand, understood the paper, but thought that it was too preliminary, demanded perfection and asked for the earth, moon, sky and stars. We retaliated with the fact that EMNLP defined short papers as those with "a small, focused contribution" or "work in progress". Goes to show how difficult it is to push something completely radical but intellectually stimulating that is not in line with all the trendy but boring incremental work that everybody else is doing. Sigh.

The good thing about people not being interested in your work is that there is no competition! So you can take your own sweet time to develop your idea further (or not).

Relevant papers

Robert Gaizauskas, Josiah Wang, Arnau Ramisa

Workshop on Vision and Language (VL'15) @ EMNLP, 2015

Comments: The first paper that presents the whole idea of visually descriptive language, and also defines it. This is really just a summary because of the page limit, but the full definition and guidelines can be found on the project webpage.

It is quite rare nowadays for a busy professor (Rob) to be so hands-on and involved in the work, but his linguistics and philosophy background gave a lot of credibility to the definition (the remaining two of us are more linguistically challenged - what in the world is a counterfactual?). All three of us also annotated two chapters from The Wonderful Wizard of Oz - I can't quite remember why this was chosen apart from it being in the public domain. Two of us (Arnau and I) also annotated a small sample of the Brown corpus - Rob skipped this because he was too busy with his other professor-ly commitments.

Tarfah Alrashid, Josiah Wang, Robert Gaizauskas

15th Joint ACL-ISO Workshop on Interoperable Semantic Annotation (ISA-15), 2019

Comments: Since the first paper, we have managed to get two paid volunteers to annotate a few more chapters from The Wonderful Wizard of Oz. Rob's PhD student Tarfah then went ahead and experimented with basic machine learning algorithms on the dataset.

Admittedly, the dataset is still tiny (and is obviously biased towards a single story). Annotating more such datasets is also tedious work. Trying to solve the problem this way is probably not the way forward if you want a more generalised visually descriptive language detector.

Predicting Prepositions in Image Descriptions

Predict the preposition that best expresses the relation between two visual entities in an image.

Relevant paper

Arnau Ramisa*, Josiah Wang*, Ying Lu, Emmanuel Dellandrea, Francesc Moreno-Noguer, Robert Gaizauskas

(* = equal contribution)

Empirical Methods in Natural Language Processing (EMNLP), 2015

Comments: The task is to automatically predict the preposition that connects a subject to an object, e.g. person on boat. In the paper, I used the terms 'trajector' and 'landmark' borrowed from the spatial role labelling task. I slightly regret the choice now as I find the terms confusing. The key idea we presented is that we can also add images as context to help with predicting prepositions. Without an image as context, you can only guess whether a person is on the boat or by the boat. Having an image gives you more certainty about the prediction.

We presented two settings. The first is an easier setting where you know what the subject and object are (person? cat?) and where they are located in the given image. Your task is to predict the preposition. The second setting is slightly harder: you do not know what the subject and object are; you are only given the bounding boxes around them in the image. Your task is to predict the category labels for the subject and object as well as the preposition.

We automatically generated the preposition dataset from existing image captioning datasets. One main issue we found is that the dataset is pretty much biased towards the preposition "with" (because you can pretty much use it anywhere and get away with it!) So, use this dataset with caution!
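The "with" bias is easy to see with a trivial baseline for the first setting, where the subject and object labels are known. The Python sketch below is not the model from the paper, which combined geometric, textual and visual features. It just predicts the most frequent preposition seen for each (subject, object) pair, and falls back to the overall most frequent preposition for unseen pairs. The training triples are made up for illustration.

```python
from collections import Counter, defaultdict

Triple = tuple[str, str, str]  # (subject, preposition, object)


def train(triples: list[Triple]) -> tuple[dict, Counter]:
    """Count prepositions per (subject, object) pair and overall."""
    per_pair: dict[tuple[str, str], Counter] = defaultdict(Counter)
    overall: Counter = Counter()
    for subj, prep, obj in triples:
        per_pair[(subj, obj)][prep] += 1
        overall[prep] += 1
    return per_pair, overall


def predict(model: tuple[dict, Counter], subj: str, obj: str) -> str:
    """Most frequent preposition for the pair, falling back to the global majority."""
    per_pair, overall = model
    counts = per_pair.get((subj, obj)) or overall
    return counts.most_common(1)[0][0]


# Hypothetical training data, deliberately skewed towards "with".
data = [
    ("person", "on", "boat"), ("person", "on", "boat"), ("person", "in", "boat"),
    ("man", "with", "dog"), ("woman", "with", "umbrella"), ("child", "with", "ball"),
]
model = train(data)
print(predict(model, "person", "boat"))  # on
print(predict(model, "cat", "sofa"))     # with (unseen pair: global majority)
```

On a dataset dominated by "with", a fallback like this can look deceptively competitive. That is one reason to check any model against such a baseline.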

Comments

This piece of work actually happened in parallel with the Visually Descriptive Language (VDL) work above. It was the result of a tight collaboration with our consortium partners École Centrale de Lyon and the Institut de Robòtica i Informàtica Industrial, Technical University of Catalonia (Barcelona). We even spent a productive week working together at École Centrale de Lyon (Lyon being the food capital of France), staying in the student dorms and eating French food (occasionally feasting at a French bouchon). This was also when I found that even a supermarket (Carrefour) serves good food - it was the closest place to eat outside the campus, since the campus is located out of town!

Anyway, this paper came during the onslaught of deep, end-to-end CNN-RNN based methods for generating image captions that seemingly popped up overnight. We decided to stick with a simpler, old-fashioned, linguistically sound direction. If you need to generate a sentence, you need to know which prepositions to use. So we tackled this very small subset of the automatic image captioning problem instead. We were pretty sure the big guns were not interested in such a small problem! Everyone wants to tackle the sparkly super-challenging problems! I have a natural tendency NOT to follow the crowd.

This is actually only half of the original paper. There was supposed to be a first half, which has never been completed to this day (I actually like what was supposed to be the first part better). For pragmatic reasons (i.e. it didn't work!), we cut the full paper in half and submitted it as a short paper. I personally thought the VDL paper was the stronger paper when we submitted both to EMNLP 2015. Surprisingly, it was this paper that got the reviewers "ooh ahh"-ing, mostly because of how well we managed to market the 'large-scale' and 'natural text' aspects of the paper. In any case, my reward was my first visit to Lisbon, enjoying Pastéis de Nata and other lovely Portuguese pastries for breakfast/brunch every day. After sampling so many pastéis de nata around Lisbon (including that famous one in Belém), my favourite is the one from Manteigaria.

MultiSubs

A multilingual subtitle corpus with words illustrated with images.

Relevant papers

LREC, 2022: Josiah Wang, Josiel Figueiredo, Lucia Specia

arXiv, 2021: Josiah Wang, Pranava Madhyastha, Josiel Figueiredo, Chiraag Lala, Lucia Specia

Comments: The arXiv version contains the full details and all our experiments. The LREC version focusses only on the dataset part, and also introduces the first fill-in-the-blank task along with some simple baselines. It is sufficient to read the arXiv version - the LREC version does not add anything new to it.

Comments

This piece of work has a sad backstory. I started work on it in July 2016, when I started working with Lucia on her MultiMT project. It was rejected by 5-6 conferences/journals between then and 2020. By then I had had enough of academic research, was already in a full-time teaching role doing something more meaningful than generating papers, and had neither the time, energy nor motivation to continue working on this. The whole team was also no longer actively working on the project. We finally just put the paper up on arXiv, since the research community did not seem interested. Eventually, we decided to just submit the dataset portion of the paper to LREC 2022, which appreciates and encourages interesting ideas much more than the more adversarial and competitive mainstream conferences/journals whose job is simply to tear down people's hard work.

At the time this work started, Lucia had just kicked off the field of multimodal machine translation, where her team took an existing image captioning dataset (Flickr30k) and hired professional translators to manually translate the captions to different languages. These datasets were small, the captions were pretty contrived and were constrained to a fixed domain (arguably not useful for real world applications), and it was hard to scale translation to more languages (manual translation is not cheap!) We thought that a more effective way to gather a new multimodal and multilingual dataset was to piggyback on an existing multilingual movie subtitle corpus and then just find images for these, solving all the problems mentioned above. This sounded clear, until we found issues in determining whether an image actually illustrates the text. For example, will an image of a red car be sufficient to illustrate "a red car with a black interior"? What about an image with a red car with some white interior visible? To solve this, we ended up with the notion of "similar", "partially similar" and "not similar". It all felt very convoluted and contrived, and our (rushed) first version of the paper was rightfully rejected.

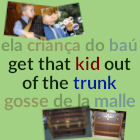

I went back to the drawing board after that. It became quite clear that we cannot have a single image illustrate a whole piece of text. The more obvious and intuitive way is to illustrate fragments of the text (words or phrases) individually, as you would visualise them when reading a sentence (you might visualise a red car in your mind when reading "the red car", and then visualise a black interior separately when you reach the "black interior" part of the sentence). Or you can go even more fine-grained and visualise "red" and "car" separately too. The new version of the paper is based on this intuition. So now an image does not need to illustrate the whole sentence, but only small fragments of the text. This also makes it easier to find more example images (since the fragments are more general), and the model will be more generalisable for image grounding tasks. Of course, I also had the ingenious idea of exploiting the parallel multilingual corpora to disambiguate the sense of words (does "trunk" refer to a tree trunk or a wooden chest? Let's check what it says in a different language!) This should have been a ground-breaking paper! 💥💥
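The cross-lingual disambiguation idea can be illustrated with a small Python sketch. It assumes that word alignments between the English subtitle and its translations are already available. It uses a tiny hypothetical sense inventory keyed by (language, translation). The actual MultiSubs pipeline is not reproduced here. Each aligned translation votes for a sense, and a tie is left unresolved.

```python
from collections import Counter
from typing import Optional

# Hypothetical sense inventory: English word -> {(language, translation): sense}.
SENSES: dict[str, dict[tuple[str, str], str]] = {
    "trunk": {
        ("pt", "tronco"): "tree trunk",
        ("pt", "baú"): "storage chest",
        ("es", "tronco"): "tree trunk",
        ("es", "baúl"): "storage chest",
        ("fr", "tronc"): "tree trunk",
        ("fr", "malle"): "storage chest",
    }
}


def disambiguate(word: str, translations: dict[str, str]) -> Optional[str]:
    """Pick the sense most aligned translations agree on; None if unknown or tied."""
    lexicon = SENSES.get(word, {})
    votes = Counter(
        lexicon[(lang, t)] for lang, t in translations.items() if (lang, t) in lexicon
    )
    if not votes:
        return None
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # translations disagree equally: still ambiguous
    return ranked[0][0]


# Aligned translations of "trunk" in one (hypothetical) subtitle line.
print(disambiguate("trunk", {"pt": "baú", "es": "baúl", "fr": "tronc"}))  # storage chest
```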

The bit that I enjoyed most was setting up our Gap Filling game and getting volunteers to play it. I remember that a volunteer actually commented that it was one of the most fun tasks they had done. I think it is definitely more fun than trying to train an ML system to perform the task!

Unfortunately, despite the brilliant idea, the paper just kept getting rejected. Update after update, the paper morphed into a monstrous patchwork that I no longer recognised. While there was generally good support for the dataset and the human gap filling game, the criticism was mainly targeted at the tasks, the models and the experiments. As mentioned, by the end of it, we just did not have the resources (or motivation) to keep trying to come up with a new model, improve the existing models or perform more experiments. Honestly, gathering a new large-scale dataset and coming up with two tasks is hard enough - are we also expected to solve both tasks perfectly? In a single paper? It was all meant to be a baseline! In any case, what you see on arXiv are the remnants of our experiments back in 2017-2018 (slightly tweaked in early 2019), hence the lack of transformer-based models. I instead went the other way and tried some simple n-gram based models, which arguably performed even better than the neural models we had. It should have been the FIRST thing to try! Reviewers seem optimistic that the latest transformer models will work astoundingly well. I will leave it to someone else to test this out. I have honestly reached the point where I am no longer excited by yet another BERT variant!
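To give a flavour of how simple such a text-only baseline can be, here is a bigram gap-filling sketch in Python. It is an illustration built on several assumptions: whitespace tokenisation, add-one smoothing, only the words immediately to the left and right of the gap, and a made-up toy corpus. It is not the exact n-gram models reported on arXiv, and it ignores the image entirely.

```python
from collections import Counter


def bigram_counts(sentences: list[str]) -> Counter:
    """Count adjacent word pairs, with sentence boundary markers."""
    counts: Counter = Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        counts.update(zip(tokens, tokens[1:]))
    return counts


def fill_gap(left: str, right: str, candidates: list[str], counts: Counter) -> str:
    """Choose the candidate that best fits between the words `left` and `right`."""
    def score(word: str) -> int:
        # Add-one smoothing so an unseen bigram on one side does not zero the score.
        return (counts[(left, word)] + 1) * (counts[(word, right)] + 1)

    return max(candidates, key=score)


# Toy corpus standing in for subtitle text.
corpus = ["i drove the red car home", "the red car is fast", "she ate a red apple"]
counts = bigram_counts(corpus)
# Fill the blank in "the red ___ is parked outside".
print(fill_gap("red", "is", ["car", "apple", "dog"], counts))  # car
```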

Zero Shot Phrase Localization

How can we get a system to automatically localize where a given phrase is depicted in an image? And without any paired training examples?

Relevant papers

Josiah Wang, Lucia Specia

International Conference on Computer Vision (ICCV), 2019

Comments

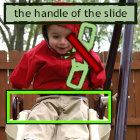

The task of phrase localization is to identify where in an image a given phrase (e.g. the handle of the slide) is depicted. At the time of this paper, models were becoming more and more complex, but all they were really doing was 'memorising' lots of given examples of phrases and their locations in images, and trying to score well in their 'exam' (evaluation).

I am naturally lazy, which is why my research tends to be about "learning from few or no examples" (life is too short to be training with large data and tweaking hyperparameters!) I also like to keep things simple. Seeing that other people have already done the hard work of training decent object detectors, I decided to just ride on their coattails. Let's just look for the objects mentioned in the phrase using existing detectors! I do not even need to do any machine learning or training! This is closer to how a person would perform the same task (you already know what a "handle" and a "slide" look like, and will try to find these in the image). Surprisingly, this simple method actually performs just as well as existing methods that are more complex and rely on many task-specific training examples.
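The detector-reuse idea fits in a few lines of Python. This is a minimal sketch under simplifying assumptions, not the ICCV 2019 method. Detections are assumed to come from some off-the-shelf detector (not shown) as hypothetical (label, score, box) records. Labels are single words matched exactly against the phrase. The earliest-mentioned matching label wins, as a crude stand-in for the phrase's head noun (e.g. "handle" in "the handle of the slide").

```python
from dataclasses import dataclass
from typing import Optional

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    """One output of a pre-trained object detector (hypothetical format)."""
    label: str
    score: float
    box: Box


def localize(phrase: str, detections: list[Detection]) -> Optional[Box]:
    """Return the box of a detected object mentioned in the phrase, or None.

    No training is involved: we only match detector labels against the phrase,
    preferring the earliest-mentioned label and then the highest confidence.
    """
    tokens = phrase.lower().split()
    matches = [d for d in detections if d.label.lower() in tokens]
    if not matches:
        return None
    best = min(matches, key=lambda d: (tokens.index(d.label.lower()), -d.score))
    return best.box


detections = [
    Detection("slide", 0.90, (0, 0, 200, 200)),
    Detection("handle", 0.60, (150, 20, 170, 60)),
    Detection("handle", 0.40, (10, 10, 20, 20)),
    Detection("dog", 0.95, (300, 300, 400, 400)),
]
print(localize("the handle of the slide", detections))  # (150, 20, 170, 60)
```

Exact label matching is the weakest point here. A phrase that mentions an object outside the detector's vocabulary simply returns None.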

The main message I wanted to bring across is that simple methods may sometimes work as well as complicated methods. We should not blindly use complicated methods without first really understanding the dataset or the task itself. Might be better to start simple sometimes! Also, getting good scores sometimes really just means that your system is simply good at solving the dataset, not the task (and definitely not 'solving artificial intelligence' or becoming 'superhuman')!

Looking back, this paper actually began when I proposed and led a discussion of a paper on localising and generating referring expressions at our reading group in Sheffield. I remember someone commenting that it was the best paper we had discussed in a while! That discussion also triggered ideas for Lucia, culminating in her leading a summer research team at the JSALT 2018 workshop in Baltimore, USA. This particular ICCV 2019 paper is an offshoot of the region-specific machine translation subgroup of our team.

This paper was initially rejected from CVPR 2019. We named our method "truly unsupervised" at the time, and the reviewers were not happy with that. We chose the term because a few papers at the time claimed to be unsupervised when they were not actually unsupervised (Rohrbach et al., Yeh et al.). How did these pass as 'unsupervised' when ours was even less supervised? I was extremely annoyed with one reviewer in particular (this was only the second time I was annoyed with a reviewer! See the comments for 'VDL' above) The review included: "Paper contains technical or experimental errors... When there is no training it does not even make sense to talk about supervision ... The method is not unsupervised because it uses pre-trained detectors ... Comparing this approach with ... weak-supervision ... 'strong' supervision ... unfair ... I think the authors should rethink their concept of supervision". Here is a sample of my response from my rebuttal: "When there is no training, it makes perfect sense to talk about no supervision and unsupervised methods ... By your argument, pre-trained CNNs should not be allowed for any unsupervised tasks since they are strongly supervised ... Supervised models should generally perform better, so we can only have been unfair against ourselves. The fact that our method is competitive is a major contribution in itself". My advice: don't try to be a know-it-all unless you really know that you know it all. Another tip: see how I used the criticisms to my advantage?

We duly changed the title of the paper to "zero-shot phrase localization" for ICCV 2019, which I thought was perfect! Unfortunately, this term also caused some confusion among the reviewers because they kept comparing it to zero-shot learning, a different setting altogether. In the end, we adopted a reviewer's suggestion - "without paired training examples". I personally thought this was a bit of a mouthful and less catchy than "zero-shot". In any case, to rub salt into the wound, another paper presented shortly after mine actually used the term "zero-shot" for the exact same setting as mine! The area chair (whom I spoke to in private during the conference) also liked my original "zero-shot" better and did not think it needed to be changed. Argh! The moral of the story - take your reviewers' comments with a pinch of salt! In any case, we fortunately had at least one super-supportive reviewer and a supportive area chair. So the paper was accepted for an oral presentation (please allow me to boast about the 4.3% oral acceptance rate!)

This was also officially my last publication as a postdoctoral researcher. I was to begin my new role as a Teaching Fellow after returning from ICCV 2019 in Seoul (or rather, after an extended holiday in Korea after ICCV).

I also unlocked a new achievement with this paper - I now officially have at least one paper at all major CV and NLP top-tier conferences (CVPR, ICCV, BMVC, ACL, EMNLP and NAACL). The only conference missing is ECCV, but I'll pretend that ICCV and ECCV are equivalent since they occur in alternate years!

Publications

Here is my obligatory publication list, sorted by year.

2022

MultiSubs: A Large-scale Multimodal and Multilingual Dataset

Josiah Wang, Josiel Figueiredo, Lucia Specia

LREC, 2022

2021

MultiSubs: A Large-scale Multimodal and Multilingual Dataset

Josiah Wang, Pranava Madhyastha, Josiel Figueiredo, Chiraag Lala, Lucia Specia

arXiv, 2021

Read, Spot and Translate

Lucia Specia, Josiah Wang, Sun Jae Lee, Alissa Ostapenko, Pranava Madhyastha

Machine Translation, 2021

2020

Grounded Sequence to Sequence Transduction

Lucia Specia, Loic Barrault, Ozan Caglayan, Amanda Duarte, Desmond Elliott, Spandana Gella, Nils Holzenberger, Chiraag Lala, Sun Jae Lee, Jindrich Libovicky, Pranava Madhyastha, Florian Metze, Karl Mulligan, Alissa Ostapenko, Shruti Palaskar, Ramon Sanabria, Josiah Wang, Raman Arora

IEEE Journal of Selected Topics in Signal Processing (JSTSP), 2020

2019

Phrase Localization Without Paired Training Examples

Josiah Wang, Lucia Specia

International Conference on Computer Vision (ICCV), 2019

VIFIDEL: Evaluating the Visual Fidelity of Image Descriptions

Pranava Madhyastha*, Josiah Wang*, Lucia Specia (* = equal contribution)

Association for Computational Linguistics (ACL), 2019

Automatic Image Annotation at ImageCLEF

Josiah Wang, Andrew Gilbert, Bart Thomee, Mauricio Villegas

Information Retrieval Evaluation in a Changing World: Lessons Learned from 20 Years of CLEF, 2019

Annotating and Recognising Visually Descriptive Language

Tarfah Alrashid, Josiah Wang, Robert Gaizauskas

15th Joint ACL-ISO Workshop on Interoperable Semantic Annotation (ISA-15), 2019

Predicting Actions to Help Predict Translations

Zixiu Wu, Julia Ive, Josiah Wang, Pranava Madhyastha, Lucia Specia

The How2 Challenge: New Tasks for Vision & Language, Workshop @ ICML2019

Transformer-based Cascaded Multimodal Speech Translation

Zixiu Wu, Ozan Caglayan, Julia Ive, Josiah Wang, Lucia Specia

International Workshop on Spoken Language Translation (IWSLT), 2019

2018

Visual and Semantic Knowledge Transfer for Large Scale Semi-supervised Object Detection

Yuxing Tang, Josiah Wang, Xiaofang Wang, Boyang Gao, Emmanuel Dellandrea, Robert Gaizauskas, Liming Chen

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 2018

Object Counts! Bringing Explicit Detections Back into Image Captioning

Josiah Wang, Pranava Madhyastha, Lucia Specia

North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT), 2018

End-to-end Image Captioning Exploits Multimodal Distributional Similarity

Pranava Madhyastha, Josiah Wang, Lucia Specia

British Machine Vision Conference (BMVC), 2018

Defoiling Foiled Image Captions

Pranava Madhyastha, Josiah Wang, Lucia Specia

North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL HLT), 2018

The Role of Image Representations in Vision to Language Tasks

Pranava Madhyastha, Josiah Wang, Lucia Specia

Natural Language Engineering, 2018

2017

Sheffield MultiMT: Using Object Posterior Predictions for Multimodal Machine Translation

Pranava Madhyastha*, Josiah Wang*, Lucia Specia (* = equal contribution)

Second Conference on Machine Translation (WMT), 2017

Unraveling the Contribution of Image Captioning and Neural Machine Translation for Multimodal Machine Translation

Chiraag Lala, Pranava Madhyastha, Josiah Wang, Lucia Specia

European Association for Machine Translation (EAMT), 2017

2016

Large Scale Semi-supervised Object Detection using Visual and Semantic Knowledge Transfer

Yuxing Tang, Josiah Wang, Boyang Gao, Emmanuel Dellandrea, Robert Gaizauskas, Liming Chen

Computer Vision & Pattern Recognition (CVPR), 2016

Harvesting Training Images for Fine-Grained Object Categories using Visual Descriptions

Josiah Wang, Katja Markert, Mark Everingham

European Conference on Information Retrieval (ECIR), 2016

Don't Mention the Shoe! A Learning to Rank Approach to Content Selection for Image Description Generation

Josiah Wang, Robert Gaizauskas

International Natural Language Generation Conference (INLG), 2016

Overview of the ImageCLEF 2016 Scalable Concept Image Annotation Task

Andrew Gilbert, Luca Piras, Josiah Wang, Fei Yan, Arnau Ramisa, Emmanuel Dellandrea, Robert Gaizauskas, Mauricio Villegas, Krystian Mikolajczyk

CLEF 2016 Working Notes, CEUR Workshop Proceedings, CEUR-WS.org, 2016

General Overview of ImageCLEF at the CLEF 2016 Labs

Mauricio Villegas, Henning Müller, Alba García Seco de Herrera, Roger Schaer, Stefano Bromuri, Andrew Gilbert, Luca Piras, Josiah Wang, Fei Yan, Arnau Ramisa, Emmanuel Dellandrea, Robert Gaizauskas, Krystian Mikolajczyk, Joan Puigcerver, Alejandro H. Toselli, Joan-Andreu Sánchez, Enrique Vidal

Experimental IR Meets Multilinguality, Multimodality, and Interaction

Lecture Notes in Computer Science (vol 9822), 2016

Cross-validating Image Description Datasets and Evaluation Metrics

Josiah Wang, Robert Gaizauskas

Language Resources and Evaluation Conference (LREC), 2016

SHEF-Multimodal: Grounding Machine Translation on Images

Kashif Shah, Josiah Wang, Lucia Specia

First Conference on Machine Translation (WMT), 2016

2015

Combining Geometric, Textual and Visual Features for Predicting Prepositions in Image Descriptions

Arnau Ramisa*, Josiah Wang*, Ying Lu, Emmanuel Dellandrea, Francesc Moreno-Noguer, Robert Gaizauskas (* = equal contribution)

Empirical Methods in Natural Language Processing (EMNLP), 2015

Defining Visually Descriptive Language

Robert Gaizauskas, Josiah Wang, Arnau Ramisa

Workshop on Vision and Language (VL'15) @ EMNLP, 2015

Generating Image Descriptions with Gold Standard Visual Inputs: Motivation, Evaluation and Baselines

Josiah Wang, Robert Gaizauskas

European Workshop on Natural Language Generation (ENLG), 2015*

* There was a bug in our original implementation of the visual prior based on bounding box position. Please refer to the errata for more details.

Overview of the ImageCLEF 2015 Scalable Image Annotation, Localization and Sentence Generation Task

Andrew Gilbert, Luca Piras, Josiah Wang, Fei Yan, Emmanuel Dellandrea, Robert Gaizauskas, Mauricio Villegas, Krystian Mikolajczyk

CLEF 2015 Working Notes, CEUR Workshop Proceedings, CEUR-WS.org, 2015

General Overview of ImageCLEF at the CLEF 2015 Labs

Mauricio Villegas, Henning Müller, Andrew Gilbert, Luca Piras, Josiah Wang, Krystian Mikolajczyk, Alba G. Seco de Herrera, Stefano Bromuri, M. Ashraful Amin, Mahmood Kazi Mohammed, Burak Acar, Suzan Uskudarli, Neda B Marvasti, José F. Aldana, María del Mar Roldán García

Experimental IR Meets Multilinguality, Multimodality, and Interaction

Lecture Notes in Computer Science (vol 9283, pp 444-461), 2015

2014

A Poodle or a Dog? Evaluating Automatic Image Annotation Using Human Descriptions at Different Levels of Granularity

Josiah K. Wang, Fei Yan, Ahmet Aker, Robert Gaizauskas

Workshop on Vision and Language (VL'14) @ COLING, 2014

2013

Learning Visual Recognition of Fine-grained Object Categories from Textual Descriptions

Josiah Wang

Ph.D. Thesis, 2013

2009

Learning Models for Object Recognition from Natural Language Descriptions

Josiah Wang, Katja Markert, Mark Everingham

British Machine Vision Conference (BMVC), 2009

2007

Representation and Recognition of Compound Spatio-temporal Entities

Josiah Wang

M.Sc. Thesis, 2007

Which English dominates the World Wide Web, British or American?

Eric Atwell, Junaid Arshad, Chien-Ming Lai, Lan Nim, Noushin Rezapour Asheghi, Josiah Wang, Justin Washtell

Corpus Linguistics, 2007
